Using AI methods and computational tools to mitigate tariff uncertainty


Introduction to the business case

This time, we are inspired by two articles that focus on how the recent wave of U.S. tariffs has created significant uncertainty for global supply chains and how AI can help address these challenges. One is from The Wall Street Journal (WSJ), titled "AI Can’t Predict the Impact of Tariffs — but It Will Try," and the other is from CNBC, titled "Companies turn to AI to navigate Trump tariff turbulence."

Sudden tariff increases on imports from China, Canada, and Mexico have forced companies to quickly reevaluate their sourcing strategies, inventory levels, and supplier relationships. Although machine learning methods and AI-powered tools can help companies analyze risk, model tariff scenarios, and optimize logistics, these tools often struggle to predict the impact of abrupt and rare changes in the environment. This is because such changes often lack historical references that can be used to train the models and generate usefully accurate predictions.

Both articles explore AI's potential for addressing tariff-related uncertainty. The WSJ piece introduces digital twins as a solution, while the CNBC article highlights rapid modeling of changes in tariff policies in simulations. Even though AI is defined so vaguely in both articles as to be nearly meaningless, both fundamentally discuss simulation-based computational approaches. Inspired by these discussions and the solutions introduced in the articles, we ask:

How can we plausibly mitigate tariff uncertainty using computational tools?

Academic's take

Tariffs are arguably the most impactful policy change affecting all businesses in recent history. Both the WSJ and CNBC articles pose an interesting question about estimating the effects of tariffs. Both use the term "AI" vaguely, though they clearly refer to some kind of predictive modeling. The main question then becomes whether the downstream effects of tariffs can be estimated using data: can this problem be modeled, and if so, how?

This problem domain falls outside my area of expertise. My very brief literature review showed that economists have produced extensive research on tariff effects, but I did not find any studies that approach the modeling challenge in the way I would frame it. So I saw value in this high-level reflection on the two articles.

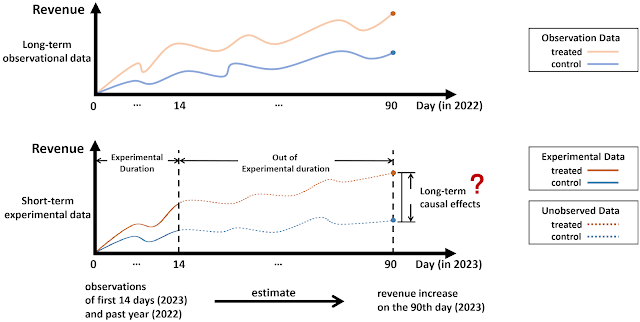

Assuming the tariff problem can be usefully modeled, we need to take a step back and ask how we should model it. To start, is this a predictive or a causal problem?

The tariff problem appears ill-suited for predictive modeling due to limited historical data. So, what's the best way to address this problem? A causal framework seems most appropriate, given that tariffs are policy interventions. In the absence of observational data, however, we may need experiments to estimate the effect. But applying tariffs solely to measure their effects would be too disruptive (though one might wonder if we are currently witnessing such an experiment). Then what else can we do? What computational tools are available for problems where historical data is missing, experiments are not feasible, and the many interconnected components create complexity?

Perhaps the solution lies in running experiments within synthetic worlds, using simulation to approximate the data we lack. If I modeled this problem, I would focus on causal modeling of tariffs using reinforcement learning (RL), that is, designing and running experiments via RL agents. The AI Economist exemplifies this type of solution, and a video introduction is available online. Other ongoing research, such as the work on Generative Flow Networks (GFlowNets), suggests that innovative use of RL agents could also lead to generative models capable of learning causal relationships. For more on the use of RL agents for causal inference, see Bengio et al. (2023) and Deleu et al. (2023).
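To make the idea of an experiment in a synthetic world concrete, here is a minimal sketch. It is far simpler than RL or the AI Economist: it only runs a randomized comparison inside a toy world where a tariff raises landed cost, cost drives price, and price drives demand. Every name and number (`simulate_demand`, the unit cost of 60, the 1.5 markup, the linear demand curve) is a hypothetical assumption chosen for illustration, not something taken from the articles.

```python
import random

def simulate_demand(tariff_rate, rng, unit_cost=60.0, markup=1.5,
                    base_demand=500.0, price_sensitivity=2.0, noise_sd=5.0):
    """One run of the toy world: tariff -> landed cost -> price -> demand."""
    landed_cost = unit_cost * (1.0 + tariff_rate)
    price = landed_cost * markup  # assumed fixed cost-plus pricing rule
    noise = rng.gauss(0.0, noise_sd)  # unexplained demand variation
    return base_demand - price_sensitivity * price + noise

def estimate_tariff_effect(tariff_rate, n_runs=10_000, seed=0):
    """Average demand with the tariff minus average demand without it."""
    rng = random.Random(seed)
    treated = [simulate_demand(tariff_rate, rng) for _ in range(n_runs)]
    control = [simulate_demand(0.0, rng) for _ in range(n_runs)]
    return sum(treated) / n_runs - sum(control) / n_runs

effect = estimate_tariff_effect(tariff_rate=0.25)
print(f"Estimated change in demand from a 25% tariff: {effect:.1f} units")
```

Because the world is synthetic, the true effect is known by construction (a drop of 45 units under these assumed parameters), so the estimate can be checked against it. An RL approach would go further by letting agents adapt their pricing and sourcing decisions instead of following a fixed rule.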

In economics, there is a growing interest in using reinforcement learning for policy solutions. There is a repository that is the most comprehensive list I know of for studies applying RL in economics, with several of them focusing on macroeconomic policy interventions. The list doesn't yet include any studies focusing on the implications of tariffs, but there may well be ongoing research that has not been made public yet. We'll have to wait and see.

More interestingly, the technology providers and "AI companies" featured in both the WSJ and CNBC articles fail to consider reinforcement learning as a viable computational solution for the tariff problem. They discuss simulation concepts like digital twins and automated scenario planning, which the Director covers next, while missing RL.

Director's cut

In an ever-changing, uncertain environment, can AI "predict" the impact of tariffs on supply chains? The short answer is: no, it cannot. Any predictive model trained on historical data will perform well only as long as history repeats itself. Once the data deviates from historical observations, predictive models start to fail.
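A small sketch can illustrate this failure mode. It assumes a hypothetical exponential demand curve and fits a straight line to prices observed only in a narrow historical range (90 to 110). The curve, the price range, and the post-tariff price of 150 are all made-up values for illustration.

```python
import math

def true_demand(price):
    """Hypothetical ground-truth demand curve (unknown to the model)."""
    return 1000.0 * math.exp(-0.02 * price)

def fit_line(xs, ys):
    """Ordinary least squares for a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

def relative_error(price, slope, intercept):
    predicted = slope * price + intercept
    return abs(predicted - true_demand(price)) / true_demand(price)

# "History": prices only ever moved between 90 and 110.
historical_prices = list(range(90, 111))
slope, intercept = fit_line(historical_prices,
                            [true_demand(p) for p in historical_prices])

in_sample_error = max(relative_error(p, slope, intercept)
                      for p in historical_prices)
shifted_error = relative_error(150, slope, intercept)  # post-tariff price

print(f"Worst error inside the historical range: {in_sample_error:.1%}")
print(f"Error at a price never seen before:      {shifted_error:.1%}")
```

Inside the historical range the linear model tracks demand closely, but at a price far outside it the prediction is badly off. Nothing in the training data could have revealed the curvature that matters once the environment shifts.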

We thought we had learned our lesson during Covid-19. As all demand forecasts failed, and with the Spanish Flu as the only historical precedent, we set aside our predictive models and instead observed how the environment was unfolding. Learning rapidly and pivoting fast became key, and companies with a better grasp of their data learned faster. We thus needed to understand the key drivers of the environments we faced. Amidst labor and manufacturing shortages, border closures, and port congestion, we found ourselves reacting to daily challenges, building algorithms to reallocate limited inventory and optimize store and distribution center efficiencies. With the tariffs currently unfolding, I ask myself: Have we truly learned our lesson? Do we really have all the information needed to navigate this environment? What additional data would we need to learn swiftly and more effectively?

To address these critical questions, we must turn to solutions like digital twins and simulations, moving beyond attempts to predict the unpredictable, as highlighted in the articles from The Wall Street Journal and CNBC.

A digital twin, a virtual representation of a real-world system or process, offers the precise "additional data" and insights needed to analyze and respond to changes in this turbulent environment. It not only mirrors the current state and its bottlenecks but also empowers users to run simulations and assess the downstream implications of various scenarios. For example, in an airport setting, a digital twin could help analyze whether opening an additional check-in kiosk would inadvertently increase waiting times at the security (TSA) lines.
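The airport example can be sketched as a tiny two-stage queue simulation. This is a deliberately simplified, deterministic illustration rather than a real digital twin: the function name `simulate_airport`, the single security lane, and all timings (one passenger per minute, 1.5 minutes per check-in, 1.2 minutes per security screening) are hypothetical assumptions. A real twin would calibrate stochastic arrival and service times from operational data.

```python
import heapq

def simulate_airport(n_passengers, n_kiosks, interarrival=1.0,
                     checkin_time=1.5, security_time=1.2):
    """Return mean waits (minutes) at check-in and at one security lane."""
    kiosk_free = [0.0] * n_kiosks  # min-heap: when each kiosk becomes free
    heapq.heapify(kiosk_free)
    security_free = 0.0            # when the security lane becomes free
    checkin_wait = 0.0
    security_wait = 0.0

    for i in range(n_passengers):
        arrival = i * interarrival
        # Take the kiosk that frees up first.
        start = max(arrival, heapq.heappop(kiosk_free))
        done_checkin = start + checkin_time
        heapq.heappush(kiosk_free, done_checkin)
        checkin_wait += start - arrival

        # With equal service times, passengers reach security in this order.
        security_start = max(done_checkin, security_free)
        security_wait += security_start - done_checkin
        security_free = security_start + security_time

    return checkin_wait / n_passengers, security_wait / n_passengers

for kiosks in (1, 2):
    checkin, security = simulate_airport(100, kiosks)
    print(f"{kiosks} kiosk(s): check-in wait {checkin:.2f} min, "
          f"security wait {security:.2f} min")
```

Under these assumed timings, a single kiosk is the bottleneck and security never queues. Adding a second kiosk removes the check-in wait but pushes passengers to security faster than the lane can process them, so the queue simply moves downstream. This is the kind of side effect a digital twin is meant to surface before the change is made.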

While simulation methodologies have been fundamental to fields like operations research for decades, the transformative element today lies in the extraordinary scale and diversity of data resources coupled with the availability of computational power. Leveraging this expanded capability, if I built a digital twin to analyze the impact of tariffs on assortment or pricing decisions, I would probably focus on simulating the following scenarios:

- If the increased costs of products sourced directly or indirectly from China are passed on to consumers as higher prices, would demand shift, and if so, to which specific products?

- Does my competition offer the same assortment and prices? If so, what is their market share? If they were to absorb the cost increase without raising prices, how much demand would I lose?

- If demand shifts to alternative products, can my suppliers meet the increased demand? Or would they also raise their prices to balance supply and demand?

- Ultimately, what is the optimal pricing strategy? Should the cost increase be partially or fully passed on to consumers, and should prices be raised across the entire category or only for the affected products?
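The last scenario can be sketched as a simple what-if comparison across pass-through levels. The example below assumes a hypothetical linear demand curve and made-up numbers (a base price of 130, a unit cost of 60, a 25% tariff on cost). It ignores competitors, substitution, and supplier constraints, which the other scenarios above would add.

```python
def profit(price, unit_cost, intercept=1000.0, slope=5.0):
    """Profit under an assumed linear demand curve: demand = intercept - slope * price."""
    demand = max(0.0, intercept - slope * price)
    return (price - unit_cost) * demand

def best_pass_through(base_price, base_cost, tariff_rate, fractions):
    """Evaluate profit for each share of the cost increase passed to consumers."""
    cost_increase = base_cost * tariff_rate
    new_cost = base_cost + cost_increase
    results = {
        fraction: profit(base_price + fraction * cost_increase, new_cost)
        for fraction in fractions
    }
    best_fraction = max(results, key=results.get)
    return best_fraction, results

best, results = best_pass_through(
    base_price=130.0, base_cost=60.0, tariff_rate=0.25,
    fractions=[0.0, 0.25, 0.5, 0.75, 1.0],
)
for fraction, value in results.items():
    print(f"pass-through {fraction:.0%}: profit {value:,.2f}")
print(f"Best pass-through in this toy setup: {best:.0%}")
```

With linear demand, passing on about half of the cost increase maximizes profit in this toy setup, while absorbing the full increase and passing it on in full perform equally poorly. A different demand shape or competitor response would change the answer, which is exactly why simulating several scenarios is more useful than a single forecast.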

References

- Bengio, Y., Lahlou, S., Deleu, T., Hu, E. J., Tiwari, M., & Bengio, E. (2023). GFlowNet Foundations. Journal of Machine Learning Research, 24(210), 1-55.

- Jain, M., Deleu, T., Hartford, J., Liu, C. H., Hernandez-Garcia, A., & Bengio, Y. (2023). GFlowNets for AI-driven Scientific Discovery. Digital Discovery, 2(3), 557-577.