Can GenAI accelerate the adoption of optimization?

Podcast-style summary by NotebookLM

Solo post: Director's cut

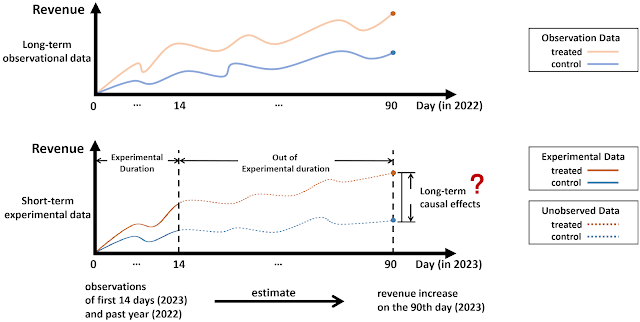

A recent paper, "Democratizing Optimization with Generative AI," investigates why businesses fail to adopt advanced optimization models and assesses whether Generative AI (GenAI) can help bridge that gap.[1] The authors organize their answer into a 4I Framework, arguing that GenAI can:

- Offer an intuitive layer to provide visibility into the inputs (Insight),

- Make the model logic and constraints transparent (Interpretability), and

- Rapidly respond to change and create what-if scenarios (Interactivity and Improvisation).

Most of the insights shared in the paper resonated with my own experience building and deploying optimization models. Optimization has long been too opaque for the teams who would benefit from it most. Why? Translating a messy, unstructured problem into a precise mathematical formulation adds layers that look like a black box from the outside. Business leaders, meanwhile, tend to reason in narratives and trade-offs, not mathematical models. Without a transparent rationale, a manager will often revert to simple heuristics rather than act on an optimization model's seemingly black-box recommendation.

Although the 4I Framework shows how GenAI can make some of the inputs and mathematical assumptions more accessible, several questions that data science and optimization teams frequently receive from business leaders remain unanswered:

- Why did the model recommend A and not B?

- What should I change in the model to get B as a recommendation?

- How robust is this recommendation? If something changes in my environment, would you continue to recommend A?

Below, I examine the reasoning behind each question, explain why it is difficult to answer, and highlight research underway that could help address it.

Why did the model recommend A and not B?

Assume we have an optimization model that recommends Policy A over Policy B as optimal. Business leaders often want to understand the rationale behind the recommendation, especially when it deviates from historical practice ("Why Policy A and not B?").

From a modeling perspective, the answer is clear: the objective function leads to Policy A under the defined constraints (assuming the solution is a global optimum). To explain this choice and the resulting recommendation, we typically demonstrate which constraints Policy B would have violated and what its resulting objective function value would have been. This serves as a counterfactual explanation based on the mathematical structure, but it isn't a systematic way to probe the model for all relevant alternatives.
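To make the "Why A and not B?" comparison concrete, here is a minimal sketch on a made-up two-product planning model. The objective, the constraint names, and the numbers are all hypothetical, and a brute-force search over small integer plans stands in for a real solver. The explanation reports either the constraints Policy B would violate or, if B is feasible, the objective value it gives up relative to A.

```python
# Hypothetical two-product planning model (illustrative numbers only):
# maximize 3*x + 5*y subject to named capacity constraints.
OBJECTIVE = (3, 5)
CONSTRAINTS = {
    "assembly hours": ((1, 2), 10),  # x + 2*y <= 10
    "labor hours": ((3, 1), 15),     # 3*x + y <= 15
}


def objective(policy):
    """Objective value (e.g., profit) of a production plan (x, y)."""
    return sum(c * v for c, v in zip(OBJECTIVE, policy))


def violations(policy):
    """Map each violated constraint to the amount by which the plan exceeds capacity."""
    out = {}
    for name, (coeffs, capacity) in CONSTRAINTS.items():
        usage = sum(a * v for a, v in zip(coeffs, policy))
        if usage > capacity:
            out[name] = usage - capacity
    return out


def solve_by_enumeration(max_units=10):
    """Brute-force stand-in for a real solver: best feasible integer plan."""
    feasible = [
        (x, y)
        for x in range(max_units + 1)
        for y in range(max_units + 1)
        if not violations((x, y))
    ]
    return max(feasible, key=objective)


def explain(policy_a, policy_b):
    """Counterfactual explanation of why the model prefers A over B."""
    broken = violations(policy_b)
    if broken:
        details = ", ".join(f"{name} exceeded by {amount}" for name, amount in broken.items())
        return f"B is infeasible: {details}"
    gap = objective(policy_a) - objective(policy_b)
    return f"B is feasible but gives up {gap} units of objective relative to A"


policy_a = solve_by_enumeration()  # (4, 3) for these numbers
print(explain(policy_a, (2, 5)))
print(explain(policy_a, (5, 0)))
```

With these numbers, the historical plan (2, 5) is rejected because it overuses assembly hours by 2, while (5, 0) is feasible but gives up 12 units of objective. Each call answers one "why not B?" at a time, which is exactly the limitation noted above: someone still has to choose which alternatives to probe.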

Studies tackling this question

To analyze the impact of constraints more systematically and report counterfactual outcomes, we could integrate explainability techniques directly into the optimization structure. Highlighting which constraints and values contributed most to the final recommendation also makes the model less of a black box. Two notable approaches are:

Argumentation for Explainable Scheduling (ArgOpt): This approach places a smart "translator" between a scheduling model and its user. It uses logical arguments to explain why a schedule is feasible and efficient. It can also reject a user's "what-if" suggestion by explaining exactly why it won't work. For example, if a hospital manager proposes a new nurse schedule, the system can analyze it and report back: "This schedule is not possible because it assigns Nurse A to a task they are not certified to perform."
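ArgOpt itself builds formal argumentation frameworks; the sketch below is a much simpler rule-checking stand-in, not that method. It only illustrates the user-facing idea of rejecting a what-if schedule with the exact rule it breaks. The certification table, the shift cap, and the function name `check_schedule` are hypothetical.

```python
from collections import Counter

# Hypothetical data: tasks each nurse is certified to perform.
CERTIFICATIONS = {
    "Nurse A": {"triage", "ward"},
    "Nurse B": {"triage", "ward", "icu"},
}
MAX_SHIFTS_PER_DAY = 2  # hypothetical labor rule


def check_schedule(schedule):
    """Return human-readable reasons a proposed schedule is rejected.

    `schedule` is a list of (shift, nurse, task) tuples; an empty result
    means no rule in this toy rule set is broken.
    """
    reasons = []
    for shift, nurse, task in schedule:
        if task not in CERTIFICATIONS.get(nurse, set()):
            reasons.append(
                f"{shift}: assigns {nurse} to '{task}', which they are not certified to perform"
            )
    shift_counts = Counter(nurse for _, nurse, _ in schedule)
    for nurse, count in shift_counts.items():
        if count > MAX_SHIFTS_PER_DAY:
            reasons.append(f"{nurse} works {count} shifts, above the limit of {MAX_SHIFTS_PER_DAY}")
    return reasons


proposal = [("Mon 07:00", "Nurse A", "icu"), ("Mon 15:00", "Nurse B", "ward")]
for reason in check_schedule(proposal) or ["Schedule accepted"]:
    print(reason)
```

The key design point is that every rejection carries the specific rule and the specific assignment that triggered it, which is what turns a bare "infeasible" into an explanation a manager can act on.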

- This provides structured, logical explanations for the optimality of the result. Details are here.

This paper introduces a method to explain counterintuitive optimization strategies. By comparing the algorithm's recommendation against a plausible alternative, the method isolates the exact reasons the recommendation is superior. For example, if a transportation optimization model suggests a longer shipping route over a shorter one, the explanation first breaks down each route's total cost into core components like fuel, tolls, and potential delay costs. It then creates a contrastive explanation by comparing the recommended route against the direct alternative, acknowledging that the alternative is indeed better on fuel and tolls. Finally, it isolates the minimal factor justifying the decision: the chosen route's higher reliability prevents potential delays that are far more costly. This way, the method doesn't just state what the best choice is; it explains the specific trade-offs that make it the winner.

- This method helps business leaders see not just what is recommended, but also why other options were not chosen. Details are here.
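The route example can be sketched as a component-by-component comparison. This is a simplified illustration in the spirit of the method, not the paper's algorithm: the cost breakdowns are hypothetical, "expected delay" is treated as an expected cost, and the minimal justification is found greedily by taking the largest advantages first (which yields the fewest components needed).

```python
# Hypothetical cost breakdowns (same currency units); "expected delay" is an expected cost.
recommended = {"fuel": 520, "tolls": 80, "expected delay": 50}
direct = {"fuel": 450, "tolls": 60, "expected delay": 300}


def contrastive_explanation(chosen, alternative):
    """Contrast a chosen option with an alternative, component by component.

    Lower cost is better, and the chosen option is assumed to have the lower total.
    Returns the components where the alternative wins (and by how much) plus the
    fewest components where the chosen option wins that outweigh those advantages.
    """
    diffs = {k: alternative[k] - chosen[k] for k in chosen}  # > 0: chosen is cheaper on k
    alternative_wins = {k: -d for k, d in diffs.items() if d < 0}
    to_outweigh = sum(alternative_wins.values())
    needed, covered = [], 0
    # Take the chosen option's largest advantages first to minimize the number of factors.
    advantages = sorted(((k, d) for k, d in diffs.items() if d > 0), key=lambda kd: kd[1], reverse=True)
    for k, d in advantages:
        needed.append(k)
        covered += d
        if covered > to_outweigh:
            break
    return alternative_wins, needed


wins, justification = contrastive_explanation(recommended, direct)
print(f"The direct route is cheaper on: {wins}")
print(f"Minimal justification for the recommended route: {justification}")
```

Here the direct route wins on fuel (by 70) and tolls (by 20), yet the recommended route's advantage on expected delay alone (250) outweighs both, so the explanation reduces to a single factor.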

What should I change in the model to get B as a recommendation?

This is a prescriptive "what-if" query that requires working backward from the expected outcome (Policy B) to the inputs necessary for that outcome. Business leaders asking this often look for specific, actionable levers to pull, such as adjusting objective function coefficients (e.g., lower cost) or constraint values (e.g., higher capacity), to make B optimal.

Studies tackling this question

Techniques such as counterfactual explanations and inverse optimization address this central problem. Both look for the minimal changes needed to achieve a desired outcome.
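For a linear program, one standard way to state the minimal-change question is as an inverse optimization problem (a textbook formulation, not necessarily the exact one used in the studies below). Take a model that minimizes $c^\top x$ subject to $Ax \ge b$, $x \ge 0$, and a feasible alternative $x_B$. We look for the cost vector $c'$ closest to $c$ under which $x_B$ is optimal. By LP duality, a feasible $x_B$ is optimal exactly when some dual-feasible $y$ matches its objective value:

$$
\begin{aligned}
\min_{c',\,y}\quad & \lVert c' - c \rVert_1 \\
\text{s.t.}\quad & A^\top y \le c', \qquad y \ge 0, \\
& c'^\top x_B = b^\top y .
\end{aligned}
$$

Because $x_B$ is fixed, every constraint is linear in $(c', y)$, and the $\ell_1$ objective can be linearized, so the problem is itself an LP. Relaxing the last equality to $c'^\top x_B \le b^\top y + \varepsilon$ gives the more flexible "nearly as good" variant, since weak duality then bounds $x_B$ within $\varepsilon$ of the optimum.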

Counterfactual Explanations for Linear Optimization: This research calculates the minimal change to a model's input parameters needed to make a desired "Policy B" the result. It considers what's required to make that policy optimal, or, in a more flexible case, what's needed to make it feasible and nearly as good as the original solution.

- This method provides business leaders with the most direct, minimal actions needed to change the decision. For example, instead of just telling a supplier their bid was too high, this method could tell them, "If you reduce your price by $0.15, your bid will be accepted." Details are here.

This inverse optimization method calculates the minimal change in the objective function's inputs required to make a specific alternative (Policy B) the optimal policy. For example, in a delivery scheduling problem, a manager might ask why a key customer, Client B, is not served before noon. The explanation process defines this desired outcome and isolates the smallest adjustment needed: "To ensure an early delivery for Client B, their priority score must be increased from 7 to 9."

- Armed with this information, business leaders can proactively adjust their priorities or inputs. Details are here.
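Both examples above ask for the smallest change to one input that flips the decision. The sketch below reduces each to a single-parameter calculation under simple assumed decision rules: the lowest total cost wins the bid, and deliveries go out in descending priority order with a fixed number of pre-noon slots. The rules, numbers, and function names are hypothetical; the cited studies handle the general multi-parameter case with optimization rather than these closed-form shortcuts.

```python
def min_price_cut_cents(bid, competitors, quantity):
    """Smallest whole-cent cut to a bid's unit price that makes it strictly cheapest.

    Each bid is (unit_price_cents, fixed_fee_cents); total cost = unit price * quantity + fee.
    Works in integer cents to avoid floating-point rounding. Returns 0 if the bid already wins.
    """
    def total(b):
        return b[0] * quantity + b[1]

    gap = total(bid) - min(total(c) for c in competitors)
    if gap < 0:
        return 0
    # Need quantity * cut > gap, so the smallest integer cut is gap // quantity + 1.
    return gap // quantity + 1


def min_priority_for_early_slot(target, priorities, early_slots):
    """Smallest integer priority guaranteeing `target` one of the first `early_slots` deliveries.

    Assumes customers are served in descending priority and ties may be broken
    against the target, so it must strictly beat the early_slots-th highest rival.
    """
    rivals = sorted((p for c, p in priorities.items() if c != target), reverse=True)
    if len(rivals) < early_slots:
        return priorities[target]  # fewer rivals than slots: the target is already early
    return max(priorities[target], rivals[early_slots - 1] + 1)


cut = min_price_cut_cents((240, 5000), [(225, 6000), (250, 0)], quantity=1000)
print(f"Reduce your unit price by ${cut / 100:.2f} and your bid will be accepted.")

scores = {"Client A": 10, "Client C": 9, "Client D": 8, "Client B": 7, "Client E": 6, "Client F": 5}
needed = min_priority_for_early_slot("Client B", scores, early_slots=3)
print(f"Raise Client B's priority score from {scores['Client B']} to {needed}.")
```

With these made-up inputs, the supplier needs a 15-cent cut per unit, and Client B needs a priority score of 9 to beat the third-highest rival (8).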

How robust is the recommendation? If something changes in my environment, would you continue to recommend A?

This is a question about the sensitivity of the optimal solution. Business leaders often ask for the range within which the solution remains valid. They need confidence that their decisions aren't based on razor-thin mathematical assumptions that small fluctuations could destroy.

To answer this question for linear programs, many solvers can report the allowable increase and decrease for every objective function coefficient and constraint right-hand side. These bounds define how far one parameter can move, with all others held fixed, before the current solution stops being optimal (for right-hand sides, before the set of binding constraints changes). This is essentially sensitivity analysis.
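Solvers report these ranges directly for linear programs; the from-scratch sketch below only illustrates what the numbers mean. On a made-up two-variable model, it enumerates the corners of the feasible region and finds the range of one objective coefficient over which the current optimum still beats every other corner. Vertex enumeration does not scale beyond toy problems, and the model and function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical LP: maximize c . (x, y) subject to a*x + b*y <= r for each row.
CONSTRAINTS = [(1, 2, 10), (3, 1, 15), (-1, 0, 0), (0, -1, 0)]  # last two encode x, y >= 0


def vertices(constraints):
    """Corners of the feasible region: feasible intersections of constraint pairs."""
    pts = set()
    for (a1, b1, r1), (a2, b2, r2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if det == 0:
            continue  # parallel lines do not meet in a single point
        x = Fraction(r1 * b2 - r2 * b1, det)  # Cramer's rule
        y = Fraction(a1 * r2 - a2 * r1, det)
        if all(a * x + b * y <= r for a, b, r in constraints):
            pts.add((x, y))
    return pts


def allowable_range(c, index, optimum, constraints):
    """Range of c[index] (others fixed) keeping `optimum` optimal; None bound = unbounded."""
    lo, hi = None, None
    for v in vertices(constraints):
        if v == optimum:
            continue
        d = [o - w for o, w in zip(optimum, v)]
        rest = sum(c[j] * d[j] for j in range(len(c)) if j != index)
        # Optimality requires c[index] * d[index] + rest >= 0 for every other vertex.
        if d[index] > 0:
            bound = Fraction(-rest) / d[index]
            lo = bound if lo is None else max(lo, bound)
        elif d[index] < 0:
            bound = Fraction(-rest) / d[index]  # dividing by a negative flips the inequality
            hi = bound if hi is None else min(hi, bound)
        elif rest < 0:
            return None  # this vertex beats the optimum whatever c[index] is
    return lo, hi


c, optimum = (3, 5), (4, 3)
lo, hi = allowable_range(c, 0, optimum, CONSTRAINTS)
print(f"Coefficient on x can range over [{float(lo)}, {float(hi)}] before the plan changes")
```

For this toy model, the coefficient of 3 can fall by 0.5 or rise by 12 before (4, 3) stops being optimal, which is the "allowable decrease" and "allowable increase" a solver would report.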

The following approaches can be used to generate robust optimal policies under uncertainty:

- The source of uncertainty may be independent of the decision made. For example, in an emergency room scheduling problem, the number of patients arriving is uncertain. When the source of uncertainty is independent, optimizing over a family of probability distributions (rather than a single distribution or worst-case set) can provide directionally robust answers.

- Alternatively, the source of uncertainty may depend on the decision made. For example, the demand fulfilled by a distribution center is influenced by its available inventory. Although such problems have been studied theoretically, real-life applications often rely on reinforcement learning and experimentation for computational tractability.
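One common way to optimize over a family of distributions is to minimize the worst-case expected cost across the family, an approach known as distributionally robust optimization. Below is a minimal sketch for the emergency room example. It assumes Poisson patient arrivals with a few plausible means, a fixed number of patients per nurse, and linear staffing and shortage costs; all numbers and names are hypothetical.

```python
import math

# Hypothetical parameters for an emergency room staffing decision.
NURSE_COST = 300         # cost per nurse per shift
SHORTAGE_COST = 100      # cost per patient who cannot be seen
PATIENTS_PER_NURSE = 4


def poisson_pmf(k, lam):
    """P(arrivals = k) for a Poisson distribution, computed in log space for stability."""
    return math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))


def expected_cost(nurses, lam, max_arrivals=150):
    """Staffing cost plus expected shortage cost when arrivals ~ Poisson(lam).

    The sum is truncated at max_arrivals, leaving a negligible tail for these means.
    """
    capacity = PATIENTS_PER_NURSE * nurses
    shortage = sum(max(k - capacity, 0) * poisson_pmf(k, lam) for k in range(max_arrivals + 1))
    return NURSE_COST * nurses + SHORTAGE_COST * shortage


def worst_case_cost(nurses, arrival_means):
    """Highest expected cost across the family of arrival distributions."""
    return max(expected_cost(nurses, lam) for lam in arrival_means)


def best_staffing(arrival_means, max_nurses=15):
    """Staffing level minimizing the worst-case expected cost over the family."""
    return min(range(max_nurses + 1), key=lambda s: worst_case_cost(s, arrival_means))


nominal = best_staffing([20])         # trusts a single forecast
robust = best_staffing([16, 20, 24])  # hedges across plausible arrival rates
print(f"Single-distribution plan: {nominal} nurses; robust plan: {robust} nurses")
```

By construction, the robust plan's worst-case cost is never higher than the single-forecast plan's, and because higher arrival rates raise the value of each extra nurse, the robust plan staffs at least as many nurses.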

Studies tackling this question

Beyond sensitivity analysis, some approaches integrate robustness directly into the model design.

Robust Interpretable Surrogates (Inherent Robustness): Instead of probing sensitivity post hoc, one approach is to use frameworks that create inherently robust policies. This paper demonstrates how to build decision tree policies that are robust against parameter changes. Unlike standard machine learning classification trees that output predefined labels, these decision trees generate actual solutions to optimization problems. The key innovation is building trees that withstand data errors, so that if measurements are slightly off or information is distorted, the recommendations remain optimal. For example, a standard model might recommend a route when a toll is under $5.00. This method instead adjusts for uncertainty by setting a safer threshold, such as $4.75, creating a buffer so the decision holds up even if costs fluctuate.

- Using this method, business leaders can gain an easy-to-follow decision framework that continues to perform well under uncertainty, improving stakeholder trust and adoption of optimized strategies. Details are here.
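The toll example boils down to buffer arithmetic at a single split. The sketch below assumes a one-split policy and a known bound on measurement error, and it works in cents; the function names and numbers are hypothetical, and the cited paper builds full robust trees rather than adjusting one threshold by hand. The buffer protects the "take this route" branch: whenever that branch fires, the route is optimal for every true toll consistent with the measurement.

```python
NOMINAL_THRESHOLD_CENTS = 500  # route is optimal when the true toll is under $5.00
MAX_ERROR_CENTS = 25           # assumed bound on how far a measured toll can be off


def robust_threshold(nominal, max_error):
    """Shift the split so the 'take route' decision survives any error up to max_error."""
    return nominal - max_error


def take_route(measured_toll, threshold):
    """One-split decision rule: take the route if the measured toll is below the threshold."""
    return measured_toll < threshold


threshold = robust_threshold(NOMINAL_THRESHOLD_CENTS, MAX_ERROR_CENTS)
print(f"Take the route only if the measured toll is under ${threshold / 100:.2f}")

# Check: every time the robust rule says "take", all true tolls within the
# error bound of the measurement are below the nominal threshold.
for measured in range(0, 1000):
    if take_route(measured, threshold):
        assert all(true < NOMINAL_THRESHOLD_CENTS
                   for true in range(measured - MAX_ERROR_CENTS, measured + MAX_ERROR_CENTS + 1))
```

A measured toll of $4.80 would trigger the nominal rule even though the true toll could be $5.05; the robust rule declines it, trading a little upside for a recommendation that cannot be wrong within the assumed error bound.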

Bottom line

In the quest to make optimization less of a black box, GenAI may excel at translating code and mathematical formulations into plain language. This can help validate basic assumptions or underlying data. However, GenAI will likely fall short when business leaders ask questions that challenge optimal policies, contrast them with alternatives, or probe how they depend on model inputs.

Emerging research in explainable AI for optimization has started to fill these gaps. As these methods mature and are embedded in commercial tools, optimization may shift from a specialist's domain to a collaborative decision-making process. The path forward may lie in combining GenAI's conversational strengths with rigorous mathematical explainability methods.

Implications for data centricity

Data centricity is staying true to the data. With rising demand for GenAI, business leaders seek to get closer to the data by probing the inputs and the underlying mathematical assumptions. As the gap between data and insights widens due to the black-box nature of the analytical steps, questions arise about how strong the linkage really is. Business leaders want to know whether decisions remain faithful to what the data actually show: if we change our assumptions about the data or the mathematical problem formulation, do our results change accordingly, and in what direction?

Explainable optimization methods contribute to data centricity by bridging the gaps between raw data, model structure, and business outcomes. By demystifying the reasoning behind model choices through counterfactuals, constraint-based narratives, or value decomposition, teams can trace outcomes back to specific data features or assumptions. This enables business leaders to examine how sensitive a recommendation is to data quality issues or missing data. Data centricity is not only about interpretability but also about accountability, as recommendations are stress-tested under changing conditions to ensure models are grounded in data.

Footnotes

[1] Simchi-Levi, D., Dai, T., Menache, I., & Wu, M. X. (2025). Democratizing optimization with generative AI. Available at SSRN 5511218.